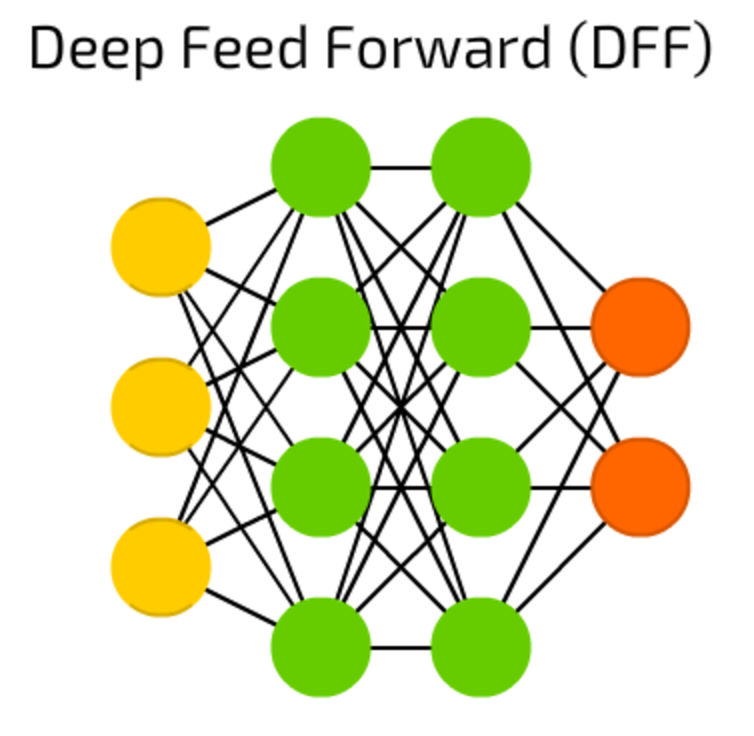

Network layout: 3 inputs : 4 neurons in the 1st hidden layer : 3 neurons in the 2nd hidden layer : 1 output neuron

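    // 3 inputs -> 4 hidden (layer 1) -> 3 hidden (layer 2) -> 1 output.
    // Each neuron is 1 bit wide and outputs 1 when its weighted sum reaches
    // THRESH. Weights are the original 0.1 .. 0.7 values scaled by 10, since
    // real-valued parameters are not synthesizable. The explicit threshold
    // approximates the rounding that happened when the real-valued sums were
    // stored in 1-bit registers.
    module NeuralNetwork(input clk,
                         input [2:0] inputs,
                         output out);   // renamed: "output" is a reserved word

        reg [3:0] hidden_neurons_layer1;
        reg [2:0] hidden_neurons_layer2;
        reg [0:0] output_neuron;

        parameter THRESH = 5;           // 0.5 scaled by 10

        // weights for inputs to first hidden layer
        parameter w0 = 3;
        parameter w1 = 4;
        parameter w2 = 2;
        parameter w3 = 1;

        // weights for first hidden layer to second hidden layer
        parameter w4 = 5;
        parameter w5 = 7;
        parameter w6 = 2;

        // weights for second hidden layer to output
        parameter w7 = 6;
        parameter w8 = 7;
        parameter w9 = 3;

        // Nonblocking assignments: each layer reads the values registered on
        // the previous clock edge, so a result appears three edges after its inputs.
        always @(posedge clk) begin
            hidden_neurons_layer1[0] <= (inputs[0] * w0 + inputs[1] * w1 + inputs[2] * w2) >= THRESH;
            hidden_neurons_layer1[1] <= (inputs[0] * w2 + inputs[1] * w1 + inputs[2] * w0) >= THRESH;
            hidden_neurons_layer1[2] <= (inputs[0] * w3 + inputs[1] * w2 + inputs[2] * w1) >= THRESH;
            hidden_neurons_layer1[3] <= (inputs[0] * w1 + inputs[1] * w2 + inputs[2] * w3) >= THRESH;

            hidden_neurons_layer2[0] <= (hidden_neurons_layer1[0] * w4 + hidden_neurons_layer1[1] * w5 + hidden_neurons_layer1[2] * w6) >= THRESH;
            hidden_neurons_layer2[1] <= (hidden_neurons_layer1[1] * w5 + hidden_neurons_layer1[2] * w6 + hidden_neurons_layer1[3] * w4) >= THRESH;
            hidden_neurons_layer2[2] <= (hidden_neurons_layer1[2] * w6 + hidden_neurons_layer1[3] * w4 + hidden_neurons_layer1[0] * w5) >= THRESH;

            output_neuron <= (hidden_neurons_layer2[0] * w7 + hidden_neurons_layer2[1] * w8 + hidden_neurons_layer2[2] * w9) >= THRESH;
        end

        // The port is a plain wire driven from the output register.
        assign out = output_neuron;

    endmodule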
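
As a cross-check on the 3 : 4 : 3 : 1 layout and the weight pattern above, the sketch below is a small simulation-only model that evaluates the three layers for all eight input patterns and prints each layer's state. The names `nn_reference_tb` and `fire` are introduced here for illustration only. Two things in it are assumptions rather than part of the original post: the weights 0.1 to 0.7 are scaled by 10 to integers, and a neuron is taken to output 1 when its weighted sum reaches 0.5 (5 after scaling).

```verilog
// Simulation-only reference model of the 3-4-3-1 network (not synthesizable).
// Assumptions: weights are the post's values scaled by 10, and a neuron
// outputs 1 when its weighted sum is at least THRESH (0.5 scaled by 10).
module nn_reference_tb;
    localparam integer THRESH = 5;

    // Step neuron (hypothetical helper): three 1-bit inputs, three weights.
    function fire;
        input a, b, c;
        input integer wa, wb, wc;
        begin
            fire = (a * wa + b * wb + c * wc) >= THRESH;
        end
    endfunction

    reg [2:0] x;    // network inputs
    reg [3:0] h1;   // first hidden layer
    reg [2:0] h2;   // second hidden layer
    reg       y;    // output neuron
    integer   i;

    initial begin
        for (i = 0; i < 8; i = i + 1) begin
            x = i;
            // inputs -> layer 1 (w0..w3 = 3, 4, 2, 1)
            h1[0] = fire(x[0], x[1], x[2], 3, 4, 2);
            h1[1] = fire(x[0], x[1], x[2], 2, 4, 3);
            h1[2] = fire(x[0], x[1], x[2], 1, 2, 4);
            h1[3] = fire(x[0], x[1], x[2], 4, 2, 1);
            // layer 1 -> layer 2 (w4..w6 = 5, 7, 2)
            h2[0] = fire(h1[0], h1[1], h1[2], 5, 7, 2);
            h2[1] = fire(h1[1], h1[2], h1[3], 7, 2, 5);
            h2[2] = fire(h1[2], h1[3], h1[0], 2, 5, 7);
            // layer 2 -> output (w7..w9 = 6, 7, 3)
            y = fire(h2[0], h2[1], h2[2], 6, 7, 3);
            $display("inputs=%b h1=%b h2=%b out=%b", x, h1, h2, y);
        end
        $finish;
    end
endmodule
```

With Icarus Verilog, for example, this could be run as `iverilog -o nn_ref nn_reference_tb.v` followed by `vvp nn_ref` (the file name is hypothetical). Working the sums by hand under the assumptions above, `out` should be 1 exactly when at least two of the three input bits are set. The model is purely combinational, so unlike a clocked design it shows no pipeline latency.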

[The mostly complete chart of Neural Networks, explained](https://towardsdatascience.com/the-mostly-complete-chart-of-neural-networks-explained-3fb6f2367464)

The zoo of neural network types grows exponentially. One needs a map to navigate between many emerging architectures and approaches.

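    // Deep feed-forward sketch: two ReLU layers, then a 1-bit output.
    // B1 and B2 are assumed bias parameters with placeholder values. The
    // original added h1 and h2 to their own pre-activations, which formed
    // combinational loops instead of a feed-forward path.
    module DFFN #(parameter [3:0] B1 = 4'd1,
                  parameter [3:0] B2 = 4'd1)
                 (input [3:0] x, output y);

        reg [3:0] h1, h2;
        wire [3:0] z1, z2;

        // Layer 1
        assign z1 = x + B1;
        always @(*) h1 = (z1 > 0) ? z1 : 4'd0;

        // Layer 2
        assign z2 = h1 + B2;
        always @(*) h2 = (z2 > 0) ? z2 : 4'd0;

        // Output layer: y is 1 when h2 >= 8. All values are unsigned 4-bit,
        // so sums wrap modulo 16 and the ReLU comparisons act as a pass-through.
        assign y = h2[3];

    endmodule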
